publications

publications by year in reverse chronological order.

2023

- A Novel Statistical Methodology for Quantifying the Spatial Arrangements of Axons in Peripheral Nerves. Abida Sanjana Shemonti, Emanuele Plebani, Natalia P. Biscola, Deborah M. Jaffey, Leif A. Havton, Janet R. Keast, Alex Pothen, M. Murat Dundar, Terry L. Powley, and Bartek Rajwa. Frontiers in Neuroscience, 2023

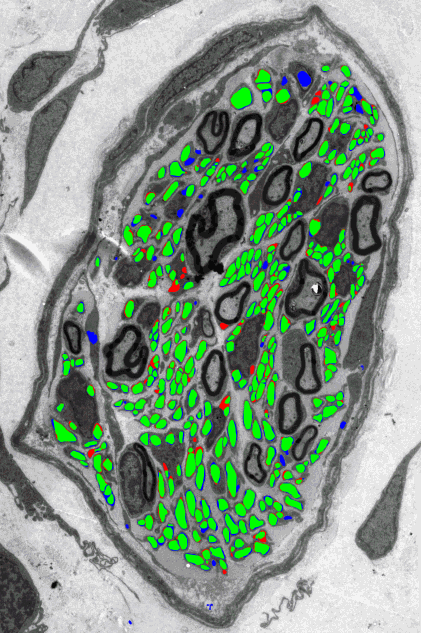

A thorough understanding of the neuroanatomy of peripheral nerves is required for a better insight into their function and the development of neuromodulation tools and strategies. In biophysical modeling, it is commonly assumed that the complex spatial arrangement of myelinated and unmyelinated axons in peripheral nerves is random; in reality, however, the axonal organization is inhomogeneous and anisotropic. Present quantitative neuroanatomy methods analyze peripheral nerves in terms of the number of axons and the morphometric characteristics of the axons, such as area and diameter. In this study, we employed spatial statistics and point process models to describe the spatial arrangement of axons and Sinkhorn distances to compute the similarities between these arrangements (in terms of first- and second-order statistics) in various vagus and pelvic nerve cross-sections. We utilized high-resolution transmission electron microscopy (TEM) images that have been segmented using a custom-built high-throughput deep learning system based on a highly modified U-Net architecture. Our findings show a novel and innovative approach to quantifying similarities between spatial point patterns using metrics derived from the solution to the optimal transport problem. We also present a generalizable pipeline for quantitative analysis of peripheral nerve architecture. Our data demonstrate differences between male- and female-originating samples and similarities between the pelvic and abdominal vagus nerves.

@article{Shemonti2023novel, title = {A Novel Statistical Methodology for Quantifying the Spatial Arrangements of Axons in Peripheral Nerves}, author = {Shemonti, Abida Sanjana and Plebani, Emanuele and Biscola, Natalia P. and Jaffey, Deborah M. and Havton, Leif A. and Keast, Janet R. and Pothen, Alex and Dundar, M. Murat and Powley, Terry L. and Rajwa, Bartek}, year = {2023}, journal = {Frontiers in Neuroscience}, volume = {17}, issn = {1662-453X}, doi = {10.3389/fnins.2023.1072779}, }

2022

- Textflow: Toward Supporting Screen-free Manipulation of Situation-Relevant Smart Messages. Pegah Karimi, Emanuele Plebani, Aqueasha Martin-Hammond, and Davide Bolchini. ACM Transactions on Interactive Intelligent Systems, Nov 2022

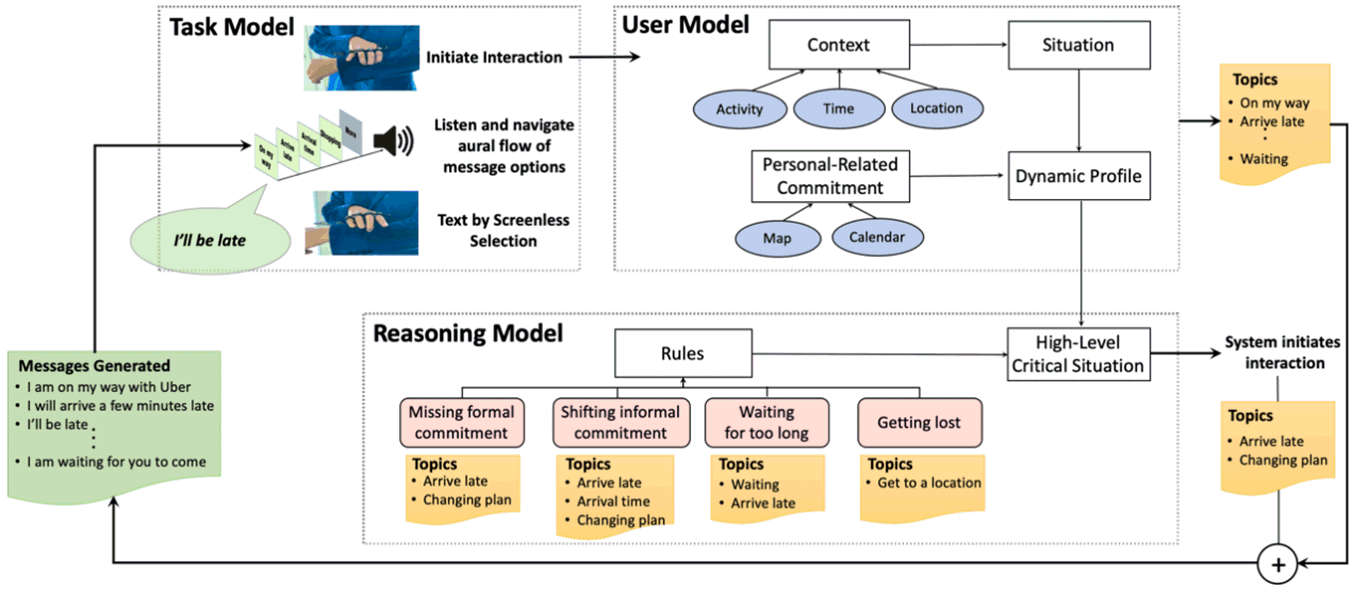

Texting relies on screen-centric prompts designed for sighted users, still posing significant barriers to people who are blind and visually impaired (BVI). Can we re-imagine texting untethered from a visual display? In an interview study, 20 BVI adults shared situations surrounding their texting practices, recurrent topics of conversations, and challenges. Informed by these insights, we introduce TextFlow, a mixed-initiative context-aware system that generates entirely auditory message options relevant to the users’ location, activity, and time of the day. Users can browse and select suggested aural messages using finger-taps supported by an off-the-shelf finger-worn device without having to hold or attend to a mobile screen. In an evaluative study, 10 BVI participants successfully interacted with TextFlow to browse and send messages in screen-free mode. The experiential response of the users shed light on the importance of bypassing the phone and accessing rapidly controllable messages at their fingertips while preserving privacy and accuracy with respect to speech or screen-based input. We discuss how non-visual access to proactive, contextual messaging can support the blind in a variety of daily scenarios.

@article{Karimi2022Textflow, title = {Textflow: {{Toward Supporting Screen-free Manipulation}} of {{Situation-Relevant Smart Messages}}}, shorttitle = {Textflow}, author = {Karimi, Pegah and Plebani, Emanuele and Martin-Hammond, Aqueasha and Bolchini, Davide}, year = {2022}, month = nov, journal = {ACM Transactions on Interactive Intelligent Systems}, volume = {12}, number = {4}, pages = {31:1--31:29}, issn = {2160-6455}, doi = {10.1145/3519263}, }

- High-Throughput Segmentation of Unmyelinated Axons by Deep Learning. Emanuele Plebani, Natalia P. Biscola, Leif A. Havton, Bartek Rajwa, Abida Sanjana Shemonti, Deborah Jaffey, Terry Powley, Janet R. Keast, Kun-Han Lu, and M. Murat Dundar. Scientific Reports, Jan 2022

Axonal characterizations of connectomes in healthy and disease phenotypes are surprisingly incomplete and biased because unmyelinated axons, the most prevalent type of fibers in the nervous system, have largely been ignored as their quantitative assessment quickly becomes unmanageable as the number of axons increases. Herein, we introduce the first prototype of a high-throughput processing pipeline for automated segmentation of unmyelinated fibers. Our team has used transmission electron microscopy images of vagus and pelvic nerves in rats. All unmyelinated axons in these images are individually annotated and used as labeled data to train and validate a deep instance segmentation network. We investigate the effect of different training strategies on the overall segmentation accuracy of the network. We extensively validate the segmentation algorithm as a stand-alone segmentation tool as well as in an expert-in-the-loop hybrid segmentation setting with preliminary, albeit remarkably encouraging results. Our algorithm achieves an instance-level \(F_1\) score of between 0.7 and 0.9 on various test images in the stand-alone mode and reduces expert annotation labor by 80% in the hybrid setting. We hope that this new high-throughput segmentation pipeline will enable quick and accurate characterization of unmyelinated fibers at scale and become instrumental in significantly advancing our understanding of connectomes in both the peripheral and the central nervous systems.

@article{Plebani2022Highthroughput, title = {High-Throughput Segmentation of Unmyelinated Axons by Deep Learning}, author = {Plebani, Emanuele and Biscola, Natalia P. and Havton, Leif A. and Rajwa, Bartek and Shemonti, Abida Sanjana and Jaffey, Deborah and Powley, Terry and Keast, Janet R. and Lu, Kun-Han and Dundar, M. Murat}, year = {2022}, month = jan, journal = {Scientific Reports}, volume = {12}, number = {1}, pages = {1198}, publisher = {Nature Publishing Group}, issn = {2045-2322}, doi = {10.1038/s41598-022-04854-3}, langid = {english}, }

- A Machine Learning Toolkit for CRISM Image Analysis. Emanuele Plebani, Bethany L. Ehlmann, Ellen K. Leask, Valerie K. Fox, and M. Murat Dundar. Icarus, Apr 2022

Hyperspectral images collected by remote sensing have played a significant role in the discovery of aqueous alteration minerals, which in turn have important implications for our understanding of the changing habitability on Mars. Traditional spectral analyses based on summary parameters have been helpful in converting hyperspectral cubes into readily visualizable three-channel maps highlighting high-level mineral composition of the Martian terrain. These maps have been used as a starting point in the search for specific mineral phases in images. Although the amount of labor needed to verify the presence of a mineral phase in an image is quite limited for phases that emerge with high abundance, manual processing becomes laborious when the task involves determining the spatial extent of detected phases or identifying small outcrops of secondary phases that appear in only a few pixels within an image. Thanks to extensive use of remote sensing data and rover expeditions, significant domain knowledge has accumulated over the years about the mineral composition of several regions of interest on Mars, which allows us to collect the reliable labeled data required to train machine learning algorithms. In this study we demonstrate the utility of machine learning in two essential tasks for hyperspectral data analysis: nonlinear noise removal and mineral classification. We develop a simple yet effective hierarchical Bayesian model for estimating distributions of spectral patterns and extensively validate this model for mineral classification on several test images. Our results demonstrate that machine learning can be highly effective in exposing tiny outcrops of specific phases in orbital data that are not uncovered by traditional spectral analysis. We package implemented scripts, documentation illustrating use cases, and pixel-scale training data collected from dozens of well-characterized images into a new toolkit. We hope that this new toolkit will provide advanced and effective processing tools and improve the community’s ability to map compositional units in remote sensing data quickly, accurately, and at scale.

@article{Plebani2022machine, title = {A Machine Learning Toolkit for {{CRISM}} Image Analysis}, author = {Plebani, Emanuele and Ehlmann, Bethany L. and Leask, Ellen K. and Fox, Valerie K. and Dundar, M. Murat}, year = {2022}, month = apr, journal = {Icarus}, volume = {376}, pages = {114849}, issn = {0019-1035}, doi = {10.1016/j.icarus.2021.114849}, }

2021

- Textflow: Screenless Access to Non-Visual Smart Messaging. Pegah Karimi, Emanuele Plebani, and Davide Bolchini. In Proceedings of the 26th International Conference on Intelligent User Interfaces, Apr 2021

Texting relies on screen-centric prompts designed for sighted users, still posing significant barriers to people who are blind and visually impaired (BVI). Can we re-imagine texting untethered from a visual display? In an interview study, 20 BVI adults shared situations surrounding their texting practices, recurrent topics of conversations, and challenges. Informed by these insights, we introduce TextFlow: a mixed-initiative context-aware system that generates entirely auditory message options relevant to the users’ location, activity, and time of the day. Users can browse and select suggested aural messages using finger-taps supported by an off-the-shelf finger-worn device, without having to hold or attend to a mobile screen. In an evaluative study, 10 BVI participants successfully interacted with TextFlow to browse and send messages in screen-free mode. The experiential response of the users shed light on the importance of bypassing the phone and accessing rapidly controllable messages at their fingertips while preserving privacy and accuracy with respect to speech or screen-based input. We discuss how non-visual access to proactive, contextual messaging can support the blind in a variety of daily scenarios.

@inproceedings{karimi_textflow_2021, address = {New York, NY, USA}, series = {{IUI} '21}, title = {Textflow: {Screenless} {Access} to {Non}-{Visual} {Smart} {Messaging}}, isbn = {978-1-4503-8017-1}, doi = {10.1145/3397481.3450697}, booktitle = {Proceedings of the 26th {International} {Conference} on {Intelligent} {User} {Interfaces}}, author = {Karimi, Pegah and Plebani, Emanuele and Bolchini, Davide}, month = apr, year = {2021}, keywords = {Text entry, Assistive technologies, Aural navigation, Intelligent wearable and mobile interfaces, Ubiquitous smart environments}, pages = {186--196}, }

2020

- A Novel, Highly Integrated Simulator for Parallel and Distributed Systems. Nikolaos Tampouratzis, Ioannis Papaefstathiou, Antonios Nikitakis, Andreas Brokalakis, Stamatis Andrianakis, Apostolos Dollas, Marco Marcon, and Emanuele Plebani. ACM Transactions on Architecture and Code Optimization, Mar 2020

In an era of complex networked parallel heterogeneous systems, independently simulating only parts, components, or attributes of a system under design is a cumbersome, inaccurate, and inefficient approach. Moreover, when each part of a system is considered in isolation, the numerous and highly complicated interactions between the different components severely limit the system optimization capabilities. The presented fully distributed simulation framework (called COSSIM) is the first known open-source, high-performance simulator that can holistically handle systems of systems including processors, peripherals and networks; such an approach is very appealing to both Cyber Physical Systems (CPS) and Highly Parallel Heterogeneous Systems designers and application developers. Our highly integrated approach is further augmented with accurate power estimation and security sub-tools that can tap into all system components and perform security and robustness analysis of the overall system under design, something that was infeasible up to now. Additionally, a sophisticated Eclipse-based Graphical User Interface (GUI) has been developed to provide easy simulation setup, execution, and visualization of results. COSSIM has been evaluated when executing the widely used Netperf benchmark suite as well as a number of real-world applications. Final results demonstrate that the presented approach has up to 99% accuracy (when compared with the performance of the real system), while the overall simulation time can be accelerated almost linearly with the number of CPUs utilized by the simulator.

@article{tampouratzis_novel_2020, title = {A {Novel}, {Highly} {Integrated} {Simulator} for {Parallel} and {Distributed} {Systems}}, volume = {17}, issn = {1544-3566}, doi = {10.1145/3378934}, number = {1}, journal = {ACM Transactions on Architecture and Code Optimization}, author = {Tampouratzis, Nikolaos and Papaefstathiou, Ioannis and Nikitakis, Antonios and Brokalakis, Andreas and Andrianakis, Stamatis and Dollas, Apostolos and Marcon, Marco and Plebani, Emanuele}, month = mar, year = {2020}, pages = {2:1--2:28}, }

2019

- Intelligent Recognition of TCP Intrusions for Embedded Micro-controllers. Remi Varenne, Jean Michel Delorme, Emanuele Plebani, Danilo Pau, and Valeria Tomaselli. In International Conference on Image Analysis and Processing, 2019

IoT end-user devices are attractive and sometimes easy targets for attackers, because they are often vulnerable in different aspects. Cyberattacks launched from those devices can easily disrupt the availability of services offered by major internet companies. People across the world who commonly access these services may experience abrupt interruptions. In that context, this paper describes an embedded prototype to classify intrusions affecting TCP packets. The proposed solution adopts an Artificial Neural Network (ANN) executed on resource-constrained and low-cost embedded microcontrollers. The prototype operates without the need for remote intelligence assistance. The adoption of an on-the-edge artificial intelligence architecture brings advantages such as responsiveness, promptness and low power consumption. The embedded intelligence is trained using the well-known KDD Cup 1999 dataset, properly balanced on 5 types of labelled intrusion patterns. A pre-trained ANN classifies features extracted from TCP packets. The results achieved in this paper refer to the application running on the low-cost, widely available Nucleo STM32 microcontroller boards from STMicroelectronics, featuring an F3 chip running at 72 MHz and an F4 chip running at 84 MHz with small embedded RAM and Flash memory.

@inproceedings{Varenne2019Intelligent, title = {Intelligent {{Recognition}} of {{TCP Intrusions}} for {{Embedded Micro-controllers}}}, booktitle = {International {{Conference}} on {{Image Analysis}} and {{Processing}}}, author = {Varenne, Remi and Delorme, Jean Michel and Plebani, Emanuele and Pau, Danilo and Tomaselli, Valeria}, year = {2019}, volume = {11808 LNCS}, pages = {361--373}, publisher = {Springer Verlag}, address = {Trento}, issn = {16113349}, doi = {10.1007/978-3-030-30754-7_36}, isbn = {978-3-030-30753-0}, }

2018

- COSSIM: An Open-Source Integrated Solution to Address the Simulator Gap for Systems of Systems. Andreas Brokalakis, Nikolaos Tampouratzis, Antonios Nikitakis, Ioannis Papaefstathiou, Stamatis Andrianakis, Danilo Pau, Emanuele Plebani, Marco Paracchini, Marco Marcon, Ioannis Sourdis, Prajith Ramakrishnan Geethakumari, Maria Carmen Palacios, Miguel Angel Anton, and Attila Szasz. In 2018 21st Euromicro Conference on Digital System Design (DSD), Aug 2018

In an era of complex networked heterogeneous systems, independently simulating only parts, components or attributes of a system under design is not a viable, accurate or efficient option. The interactions are too many and too complicated to produce meaningful results and the optimization opportunities are severely limited when considering each part of a system in an isolated manner. The presented COSSIM simulation framework is the first known open-source, high-performance simulator that can holistically handle systems of systems including processors, peripherals and networks; such an approach is very appealing to both CPS/IoT and Highly Parallel Heterogeneous Systems designers and application developers. Our highly integrated approach is further augmented with accurate power estimation and security sub-tools that can tap into all system components and perform security and robustness analysis of the overall networked system. Additionally, a GUI has been developed to provide easy simulation set-up, execution and visualization of results. COSSIM has been evaluated using real-world applications representing cloud (mobile visual search) and CPS systems (building management), demonstrating high accuracy and performance that scales almost linearly with the number of CPUs dedicated to the simulator.

@inproceedings{Brokalakis2018COSSIM, title = {{{COSSIM}}: {{An Open-Source Integrated Solution}} to {{Address}} the {{Simulator Gap}} for {{Systems}} of {{Systems}}}, shorttitle = {{{COSSIM}}}, booktitle = {2018 21st {{Euromicro Conference}} on {{Digital System Design}} ({{DSD}})}, author = {Brokalakis, Andreas and Tampouratzis, Nikolaos and Nikitakis, Antonios and Papaefstathiou, Ioannis and Andrianakis, Stamatis and Pau, Danilo and Plebani, Emanuele and Paracchini, Marco and Marcon, Marco and Sourdis, Ioannis and Geethakumari, Prajith Ramakrishnan and Palacios, Maria Carmen and Anton, Miguel Angel and Szasz, Attila}, year = {2018}, month = aug, pages = {115--120}, doi = {10.1109/DSD.2018.00033}, }

- An Open-Source Extendable, Highly-Accurate and Security Aware Simulator for Cloud Applications. Andreas Brokalakis, Nikolaos Tampouratzis, Antonios Nikitakis, Ioannis Papaefstathiou, Stamatis Andrianakis, Apostolos Dollas, Marco Paracchini, Marco Marcon, Danilo Pietro Pau, and Emanuele Plebani. 21st Conference on Innovation in Clouds, Internet and Networks, ICIN 2018, 2018

In this demo, we present COSSIM, an open-source simulation framework for cloud applications. Our solution models the client and server computing devices as well as the network that comprise the overall system and thus provides cycle accurate results, realistic communications and power/energy consumption estimates based on the actual dynamic usage scenarios. The simulator provides the necessary hooks to security testing software and can be extended through an IEEE standardized interface to include additional tools, such as simulators of physical models. The application that will be used to demonstrate COSSIM is mobile visual search, where mobile nodes capture images, extract their compressed representation and dispatch a query to the cloud. A server compares the received query to a local database and sends back some of the corresponding results.

@article{Brokalakis2018opensource, title = {An Open-Source Extendable, Highly-Accurate and Security Aware Simulator for Cloud Applications}, author = {Brokalakis, Andreas and Tampouratzis, Nikolaos and Nikitakis, Antonios and Papaefstathiou, Ioannis and Andrianakis, Stamatis and Dollas, Apostolos and Paracchini, Marco and Marcon, Marco and Pau, Danilo Pietro and Plebani, Emanuele}, year = {2018}, journal = {21st Conference on Innovation in Clouds, Internet and Networks, ICIN 2018}, pages = {1--3}, publisher = {IEEE}, issn = {2472-8144}, doi = {10.1109/ICIN.2018.8401578}, isbn = {9781538634585}, }

- Pre-Trainable Reservoir Computing with Recursive Neural Gas. Luca Carcano, Emanuele Plebani, Danilo Pietro Pau, and Marco Piastra. Jul 2018

Echo State Networks (ESN) are a class of Recurrent Neural Networks (RNN) that have gained substantial popularity due to their effectiveness, ease of use and potential for compact hardware implementation. An ESN contains three network layers (input, reservoir and readout), of which the reservoir is the truly recurrent network. The input and reservoir layers of an ESN are initialized at random and never trained afterwards; training is applied to the readout layer only. Recursive Neural Gas (RNG) is one of the many proposals for fully trainable reservoirs that can be found in the literature. Although some improvements in performance have been reported with RNG, to the best of the authors’ knowledge, no experimental comparative results are known with benchmarks for which ESN is known to yield excellent results. This work describes an accurate model of RNG together with some extensions to the models presented in the literature and shows comparative results on three well-known and accepted datasets. The experimental results obtained show that, under specific circumstances, RNG-based reservoirs can achieve better performance.

@article{Carcano2018Pretrainable, title = {Pre-Trainable {{Reservoir Computing}} with {{Recursive Neural Gas}}}, author = {Carcano, Luca and Plebani, Emanuele and Pau, Danilo Pietro and Piastra, Marco}, year = {2018}, month = jul, }

- Parallelized Convolutions for Embedded Ultra Low Power Deep Learning SoC. Lorenzo Cunial, Ahmet Erdem, Cristina Silvano, Mirko Falchetto, Andrea C. Ornstein, Emanuele Plebani, Giuseppe Desoli, and Danilo Pau. In IEEE 4th International Forum on Research and Technologies for Society and Industry, RTSI 2018 - Proceedings, Nov 2018

Deep Convolutional Neural Networks (DCNNs) achieve state-of-the-art results compared to classic machine learning in many applications that need recognition, identification and classification. An ever-increasing embedded deployment of DCNN inference engines has been observed, supporting the intelligence-close-to-the-sensor paradigm and overcoming limitations of cloud-based computing such as bandwidth requirements, security, privacy, scalability, and responsiveness. However, increasing the robustness and accuracy of DCNNs comes at the price of increased computational cost. As a result, implementing CNNs on embedded devices with real-time constraints is a challenge if the lowest power consumption is to be achieved. A solution to the challenge is to take advantage of the massive intra-device fine-grain parallelism offered by these systems and benefit from the extensive concurrency exhibited by DCNN processing pipelines. The trick is to divide intensive tasks into smaller, weakly interacting batches subject to parallel processing. To that end, this paper has two main goals: 1) describe the implementation of a state-of-the-art technique to map the most intensive DCNN tasks (dominated by multiply-and-accumulate operations) onto the Orlando SoC, an ultra-low-power heterogeneous multi-core system developed by STMicroelectronics; 2) integrate the proposed implementation into a toolchain that allows deep learning developers to deploy DCNNs in low-power applications.

@inproceedings{Cunial2018Parallelized, title = {Parallelized {{Convolutions}} for {{Embedded Ultra Low Power Deep Learning SoC}}}, booktitle = {{{IEEE}} 4th {{International Forum}} on {{Research}} and {{Technologies}} for {{Society}} and {{Industry}}, {{RTSI}} 2018 - {{Proceedings}}}, author = {Cunial, Lorenzo and Erdem, Ahmet and Silvano, Cristina and Falchetto, Mirko and Ornstein, Andrea C. and Plebani, Emanuele and Desoli, Giuseppe and Pau, Danilo}, year = {2018}, month = nov, publisher = {{Institute of Electrical and Electronics Engineers Inc.}}, doi = {10.1109/RTSI.2018.8548362}, isbn = {978-1-5386-6282-3}, }

- Low-Power Design of a Gravity Rotation Module for HAR Systems Based on Inertial Sensors. Antonio De Vita, Gian Domenico Licciardo, Luigi Di Benedetto, Danilo Pau, Emanuele Plebani, and Angelo Bosco. Proceedings of the International Conference on Application-Specific Systems, Architectures and Processors, 2018

In this paper, the design of a hardware module that eliminates the effect of gravitational acceleration from data acquired by inertial sensors is presented for the first time. A new ‘hardware friendly’ algorithm has been derived from Rodrigues’ rotation formula, which can be implemented in a more compact iterative structure. By exploiting 32-bit floating-point arithmetic, the design is able to combine the high accuracy and low power requirements needed by any intelligent Human Activity Recognition system based on artificial neural networks. Synthesis with 65 nm CMOS standard cells returns a power dissipation below 2 \(\mu\)W and an area of about 0.05 mm². The results are the current state of the art for this kind of system and are very promising for future integration in smart sensors for wearable applications.

@article{DeVita2018Lowpower, title = {Low-Power {{Design}} of a {{Gravity Rotation Module}} for {{HAR Systems Based}} on {{Inertial Sensors}}}, author = {De Vita, Antonio and Licciardo, Gian Domenico and Di Benedetto, Luigi and Pau, Danilo and Plebani, Emanuele and Bosco, Angelo}, year = {2018}, journal = {Proceedings of the International Conference on Application-Specific Systems, Architectures and Processors}, volume = {2018-July}, pages = {1--4}, publisher = {IEEE}, issn = {10636862}, doi = {10.1109/ASAP.2018.8445130}, isbn = {9781538674796}, }

- Efficient Light Harvesting for Accurate Neural Classification of Human Activities. Alessandro Nicosia, Danilo Pau, Davide Giacalone, Emanuele Plebani, Angelo Bosco, and Antonio Iacchetti. 2018 IEEE International Conference on Consumer Electronics, ICCE 2018, 2018

Extending the energy autonomy of wearable devices is an ever-increasing user need, and it can be achieved by inexpensive energy harvesting from broadly available solar and artificial light. However, efficient conversion, relevant storage and utilization must be carefully implemented if the device supports power-hungry applications such as Artificial Intelligence for human activity classification based on Artificial Neural Networks. In this paper, a complete hardware and software system implementation is presented, which is able to extend system autonomy while achieving high classification accuracy. Quantitative and qualitative results are shown under real working conditions.

@article{Nicosia2018Efficient, title = {Efficient Light Harvesting for Accurate Neural Classification of Human Activities}, author = {Nicosia, Alessandro and Pau, Danilo and Giacalone, Davide and Plebani, Emanuele and Bosco, Angelo and Iacchetti, Antonio}, year = {2018}, journal = {2018 IEEE International Conference on Consumer Electronics, ICCE 2018}, volume = {2018-Janua}, pages = {1--4}, doi = {10.1109/ICCE.2018.8326103}, isbn = {9781538630259}, }

- Automated Generation of a Single Shot Detector C Library from High Level Deep Learning Frameworks. Luca Ranalli, Luigi Di Stefano, Emanuele Plebani, Mirko Falchetto, Danilo Pau, and Viviana D'Alto. In IEEE 4th International Forum on Research and Technologies for Society and Industry, RTSI 2018 - Proceedings, Nov 2018

In designing accurate and high-performance artificial neural network (ANN) topologies for embedded systems, several weeks of engineering work are required to properly condition the input data, implement architectures optimized both in terms of memory and operations using any of the off-the-shelf deep learning frameworks and then test the models in proper-scale data-driven experiments. Moreover, code implementing the layers needs to be mapped and validated on the target systems, requiring additional months of hard, manual engineering work. To shorten this inefficient and unproductive development procedure, an efficient and automated C library generation workflow has been created. This work presents the phases involved in the automated mapping of a Single Shot Object Detector (SSD) model onto an embedded library, where low power consumption is the focus and memory usage needs to be properly handled and minimized when possible. The implementation aspects, dealing with the mapping of high-level functions and dynamic data structures into low-level logical equivalents in ANSI C, are presented; in addition, a brief explanation of the validation process as well as a short summary of the link between memory usage and the implementation details of the detector are also provided.

@inproceedings{Ranalli2018Automated, title = {Automated {{Generation}} of a {{Single Shot Detector C Library}} from {{High Level Deep Learning Frameworks}}}, booktitle = {{{IEEE}} 4th {{International Forum}} on {{Research}} and {{Technologies}} for {{Society}} and {{Industry}}, {{RTSI}} 2018 - {{Proceedings}}}, author = {Ranalli, Luca and Di Stefano, Luigi and Plebani, Emanuele and Falchetto, Mirko and Pau, Danilo and D'Alto, Viviana}, year = {2018}, month = nov, publisher = {{Institute of Electrical and Electronics Engineers Inc.}}, doi = {10.1109/RTSI.2018.8548427}, isbn = {978-1-5386-6282-3}, }

- Studying the Effects of Feature Extraction Settings on the Accuracy and Memory Requirements of Neural Networks for Keyword Spotting. Muhammad Shahnawaz, Emanuele Plebani, Ivana Guaneri, Danilo Pau, and Marco Marcon. In IEEE International Conference on Consumer Electronics - Berlin, ICCE-Berlin, Dec 2018

Due to the always-on nature of keyword spotting (KWS) systems, low-power micro-controller units (MCUs) are the best choice as deployment devices. However, the small computation power and memory budget of MCUs can compromise the accuracy requirements. Although many studies have been conducted to design small-memory-footprint neural networks to address this problem, the effects of different feature extraction settings are rarely studied. This work addresses this important question by first comparing six of the most popular and state-of-the-art neural network architectures for KWS on the Google Speech-Commands dataset. Then, keeping the network architectures unchanged, it performs comprehensive investigations into the effects of different frequency transformation settings, such as the number of mel-frequency cepstrum coefficients (MFCCs) used and the length of the stride window, on the accuracy and memory footprint (RAM/ROM) of the models. The results show that different preprocessing settings can significantly change the accuracy and RAM/ROM requirements of the models. Furthermore, it is shown that DS-CNN outperforms the other architectures in terms of accuracy with a value of 93.47% with the least amount of ROM requirements, while the GRU outperforms all other networks with an accuracy of 91.02% with the smallest RAM requirements.

@inproceedings{Shahnawaz2018Studying, title = {Studying the Effects of Feature Extraction Settings on the Accuracy and Memory Requirements of Neural Networks for Keyword Spotting}, booktitle = {{{IEEE International Conference}} on {{Consumer Electronics}} - {{Berlin}}, {{ICCE-Berlin}}}, author = {Shahnawaz, Muhammad and Plebani, Emanuele and Guaneri, Ivana and Pau, Danilo and Marcon, Marco}, year = {2018}, month = dec, volume = {2018-Septe}, publisher = {IEEE Computer Society}, issn = {21666822}, doi = {10.1109/ICCE-Berlin.2018.8576243}, isbn = {978-1-5386-6095-9}, }

2017

- Towards Enhancement of Gender Estimation from Fingerprints. Emanuela Marasco, Emanuele Plebani, Giang Dao, and Bojan Cukic. In IEEE International Workshop on The Bright and Dark Sides of Computer Vision: Challenges and Opportunities for Privacy and Security (CV-COPS), 2017

Accurate gender prediction benefits several applications. In biometrics, beyond filtering large databases, a gender recognizer can be combined with the output of primary identifiers to increase the recognition accuracy in challenging scenarios (e.g., partial evidence) [1]. In criminal investigation, gender classification may shorten the list of suspects. Although reliable gender estimators are needed, most of the existing approaches are not highly accurate, and often the process is not fully automated. Epidermal ridges are formed during the first three to four months of the gestational period and the resulting ridge configuration remains stable. Ridges and their arrangement, referred to as dermatoglyphics, are determined not only by environmental factors but also by genetics [2, 3]. In the scientific literature, fingerprints of females are assumed to have thinner epidermal ridge details, which leads to females having a higher ridge density compared to males. Consequently, existing methods tend to relate gender determination to a direct measure of the ridge density [4]. However, this approach may not be robust to image degradation (e.g., partial impressions, low quality).

@inproceedings{Marasco2017Enhancement, title = {Towards {{Enhancement}} of {{Gender Estimation}} from {{Fingerprints}}}, booktitle = {{{IEEE International Workshop}} on {{The Bright}} and {{Dark Sides}} of {{Computer Vision}}: {{Challenges}} and {{Opportunities}} for {{Privacy}} and {{Security}} ({{CV-COPS}})}, author = {Marasco, Emanuela and Plebani, Emanuele and Dao, Giang and Cukic, Bojan}, year = {2017}, pages = {1--2}, address = {Honolulu, Hawaii}, }

- Accurate Cyber-Physical System Simulation for Distributed Visual Search Applications. In 2017 IEEE 3rd International Forum on Research and Technologies for Society and Industry (RTSI), Sep 2017

A Cyber-Physical System (CPS) is defined as a system, usually distributed, that links the digital (cyber) and physical worlds. Such systems feature different computational cores and heterogeneous sensors linked through networks of different types, allowing a deeper interaction with the physical world by collecting, storing and exchanging information intelligently. In this work, an open source CPS simulator called COSSIM is described and a smart mechanism is proposed in order to turn it into a co-simulator. In addition, a CPS based on a Computer Vision application, called Mobile Visual Search (MVS), is described and ported to COSSIM in order to test the correctness of the simulation and to prove the benefit of the proposed acceleration. Quantitative and qualitative precision results in both real and simulated scenarios are also presented.

@inproceedings{Martino2017Accurate, title = {Accurate Cyber-Physical System Simulation for Distributed Visual Search Applications}, booktitle = {2017 {{IEEE}} 3rd {{International Forum}} on {{Research}} and {{Technologies}} for {{Society}} and {{Industry}} ({{RTSI}})}, author = {Martino, Danilo and Shen, Yun and Paracchini, Marco and Marcon, Marco and Plebani, Emanuele and Pau, Danilo Pietro}, year = {2017}, month = sep, pages = {1--5}, publisher = {IEEE}, address = {Modena}, doi = {10.1109/RTSI.2017.8065917}, isbn = {978-1-5386-3906-1}, }

- Embedded Real-Time Visual Search with Visual Distance Estimation. In ICIAP, 2017

Visual Search algorithms are a class of methods that retrieve images by their content. In particular, given a database of reference images and a query image, the goal is to find an image in the database that depicts the same object as the query, if any. Moreover, in many real applications more than one object of interest may appear in the query image. Furthermore, in such situations it is often not sufficient to identify the object depicted in a query image: its precise localization inside the scene viewed by the camera is also required. In this paper we propose to couple a Visual Search system, which can retrieve multiple objects from the same query image, with an additional Distance Estimation module that exploits the localization information already computed inside the Visual Search stage to estimate the localization of the object in three dimensions. In this work we implement the complete image retrieval and spatial localization pipeline (including relative distance estimation) on two different embedded devices, also exploiting their GPUs in order to obtain near-real-time performance on low-power devices. Lastly, the accuracy of the proposed distance estimation is evaluated on a dataset of annotated query-reference pairs created ad hoc.

@inproceedings{Paracchini2017, title = {Embedded {{Real-Time Visual Search}} with {{Visual Distance Estimation}}}, booktitle = {{{ICIAP}}}, author = {Paracchini, Marco and Plebani, Emanuele and Iche, Mehdi Ben and Pau, Danilo Pietro and Marcon, Marco}, editor = {Battiato, Sebastiano and Gallo, Giovanni and Schettini, Raimondo and Stanco, Filippo}, year = {2017}, pages = {59--69}, publisher = {Springer International Publishing}, address = {Catania, Italy}, doi = {10.1007/978-3-319-68548-9_6}, isbn = {978-3-319-68548-9}, }

- Complexity and Accuracy of Hand-Crafted Detection Methods Compared to Convolutional Neural Networks. Valeria Tomaselli, Emanuele Plebani, Mauro Strano, and Danilo Pau. In ICIAP, 2017

Even though Convolutional Neural Networks have had the best accuracy in the last few years, they have a price in terms of computational complexity and memory footprint, due to a large number of multiply-accumulate operations and model parameters. For embedded systems, this complexity severely limits the opportunities to reduce power consumption, which is dominated by memory read and write operations. Anticipating the oncoming integration into intelligent sensor devices, we compare hand-crafted features for the detection of a limited number of objects against some typical convolutional neural network architectures. Experiments on some state-of-the-art datasets, addressing detection tasks, show that for some problems the increased complexity of neural networks is not reflected by a large increase in accuracy. Moreover, our analysis suggests that for embedded devices hand-crafted features are still competitive in terms of accuracy/complexity trade-offs.

@inproceedings{Tomaselli2017, title = {Complexity and {{Accuracy}} of {{Hand-Crafted Detection Methods Compared}} to {{Convolutional Neural Networks}}}, booktitle = {{{ICIAP}}}, author = {Tomaselli, Valeria and Plebani, Emanuele and Strano, Mauro and Pau, Danilo}, editor = {Battiato, Sebastiano and Gallo, Giovanni and Schettini, Raimondo and Stanco, Filippo}, year = {2017}, pages = {298--308}, publisher = {Springer International Publishing}, address = {Catania, Italy}, doi = {10.1007/978-3-319-68560-1_27}, isbn = {978-3-319-68560-1}, }

2016

- The Orlando Project: A 28 nm FD-SOI Low Memory Embedded Neural Network ASIC. Giuseppe Desoli, Valeria Tomaselli, Emanuele Plebani, Giulio Urlini, Danilo Pau, Viviana D’Alto, Tommaso Majo, Fabio De Ambroggi, Thomas Boesch, Surinder-pal Singh, Elio Guidetti, and Nitin Chawla. In Advanced Concepts for Intelligent Vision Systems, 2016

The recent success of neural networks in various computer vision tasks opens the possibility of adding visual intelligence to mobile and wearable devices; however, networks run on embedded CPUs or GPUs cannot meet the stringent power requirements of such devices. To address such challenges, STMicroelectronics developed the Orlando Project, a new low-power architecture for convolutional neural network acceleration suited for wearable devices. An important contribution to the energy usage is the storage of and access to the neural network parameters. In this paper, we show that with adequate model compression schemes based on weight quantization and pruning, a whole AlexNet network can fit in the local memory of an embedded processor, thus avoiding additional system complexity and energy usage, with no or low impact on the accuracy of the network. Moreover, the compression methods work well across different tasks, e.g. image classification and object detection.

@inproceedings{Desoli2016, title = {The {{Orlando Project}}: {{A}} 28~nm {{FD-SOI Low Memory Embedded Neural Network ASIC}}}, booktitle = {Advanced {{Concepts}} for {{Intelligent Vision Systems}}}, author = {Desoli, Giuseppe and Tomaselli, Valeria and Plebani, Emanuele and Urlini, Giulio and Pau, Danilo and D'Alto, Viviana and Majo, Tommaso and De Ambroggi, Fabio and Boesch, Thomas and Singh, Surinder-pal and Guidetti, Elio and Chawla, Nitin}, year = {2016}, pages = {217--227}, publisher = {Springer International Publishing}, address = {Lecce, Italy}, doi = {10.1007/978-3-319-48680-2_20}, }

- Accurate Omnidirectional Multi-Camera Embedded Structure from Motion. In Research and Technologies for Society and Industry Leveraging a Better Tomorrow (RTSI), 2016 IEEE 2nd International Forum On, Sep 2016

Trajectory estimation and 3D scene reconstruction from multiple cameras (also referred to as Structure from Motion, SfM) will have a central role in the future of the automotive industry. Typical application fields will be: autonomous navigation/guidance, collision avoidance against static or moving objects (in particular pedestrians), assisted parking maneuvers and many more. The work presented in this paper has two main goals: (1) to describe the implementation of a real-time embedded SfM modular pipeline featuring a dedicated optimized HW/SW system partitioning, which also includes nonlinear optimizations such as local and global bundle adjustment at different stages of the pipeline; (2) to demonstrate quantitatively its performance on a synthetic test space specifically designed for its characterization. In order to make the system reliable and effective, providing the driver or the autonomous vehicle with a prompt response, the data rates and low latency of 5G communication systems appear to make them the most promising communication solution.

@inproceedings{Paracchini2016, title = {Accurate Omnidirectional Multi-Camera Embedded Structure from Motion}, booktitle = {Research and {{Technologies}} for {{Society}} and {{Industry Leveraging}} a Better Tomorrow ({{RTSI}}), 2016 {{IEEE}} 2nd {{International Forum}} On}, author = {Paracchini, Marco and Schepis, Angelo and Marcon, Marco and Falchetto, Mirko and Plebani, Emanuele and Pau, Danilo}, year = {2016}, month = sep, pages = {1--6}, publisher = {IEEE}, doi = {10.1109/RTSI.2016.7740560}, isbn = {978-1-5090-1131-5}, }

- Visual Search of Multiple Objects from a Single Query. In Consumer Electronics-Berlin (ICCE-Berlin), 2016 IEEE 6th International Conference On, 2016

Hundreds of millions of images are uploaded to the cloud every day. Innovative applications able to analyze and efficiently extract information from such a large database are needed now more than ever. Visual Search is an application able to retrieve information about a query image by comparing it against a large image database. This paper presents a Visual Search pipeline implementation able to retrieve multiple objects depicted in a single query image. Quantitative and qualitative precision results are shown on both real and synthetic datasets.

@inproceedings{paracchini2016visual, title = {Visual {{Search}} of Multiple Objects from a Single Query}, booktitle = {Consumer {{Electronics-Berlin}} ({{ICCE-Berlin}}), 2016 {{IEEE}} 6th {{International Conference}} On}, author = {Paracchini, Marco and Marcon, Marco and Plebani, Emanuele and Pau, Danilo Pietro}, year = {2016}, pages = {41--45}, publisher = {IEEE}, address = {Berlin, Germany}, doi = {10.1109/ICCE-Berlin.2016.7684712}, }

- Reduced Memory Region Based Deep Convolutional Neural Network Detection. In Consumer Electronics-Berlin (ICCE-Berlin), 2016 IEEE 6th International Conference On, Sep 2016

Accurate pedestrian detection has a primary role in automotive safety: for example, by issuing warnings to the driver or actively acting on the car’s brakes, it helps decrease the probability of injuries and human fatalities. In order to achieve very high accuracy, recent pedestrian detectors have been based on Convolutional Neural Networks (CNN). Unfortunately, such approaches require vast amounts of computational power and memory, preventing efficient implementations on embedded systems. This work proposes a CNN-based detector, adapting a general-purpose convolutional network to the task at hand. By thoroughly analyzing and optimizing each step of the detection pipeline, we develop an architecture that outperforms methods based on traditional image features and achieves an accuracy close to the state-of-the-art while having low computational complexity. Furthermore, the model is compressed in order to fit the tight constraints of low power devices with a limited amount of embedded memory available. This paper makes two main contributions: (1) it proves that a region based deep neural network can be finely tuned to achieve adequate accuracy for pedestrian detection; (2) it achieves a very low memory usage without reducing detection accuracy on the Caltech Pedestrian dataset.

@inproceedings{tome2016reduced, title = {Reduced {{Memory Region Based Deep Convolutional Neural Network Detection}}}, booktitle = {Consumer {{Electronics-Berlin}} ({{ICCE-Berlin}}), 2016 {{IEEE}} 6th {{International Conference}} On}, author = {Tomé, Denis and Bondi, Luca and Plebani, Emanuele and Baroffio, Luca and Pau, Danilo and Tubaro, Stefano}, year = {2016}, month = sep, pages = {15--19}, publisher = {IEEE}, address = {Berlin, Germany}, doi = {10.1109/ICCE-Berlin.2016.7684706}, }

2015

- Accurate Characterization of Embedded Structure from Motion. In 2015 IEEE 1st International Workshop on Consumer Electronics (CE WS), Mar 2015

Trajectory estimation and 3D scene reconstruction from a single camera, i.e. Structure from Motion, is going to have a central role in the future of the automotive industry. Typical application fields will be: collision avoidance with any kind of object (people included), assisted parking maneuvers and many more. Indeed, various countries are becoming more and more concerned about road traffic safety and therefore, through its “Advanced Program”, EuroNCAP rewards vehicle manufacturers who employ Advanced Safety Technologies that assist the driver. This paper has two main goals: (1) to describe the implementation of a state-of-the-art Structure from Motion pipeline able to run in real time with an embedded fish-eye camera, which includes nonlinear optimization (i.e. local bundle adjustment); (2) to demonstrate quantitatively its performance on a synthetic test space specifically designed for its characterization in terms of accuracy.

@inproceedings{Paracchini2015, title = {Accurate Characterization of Embedded {{Structure}} from {{Motion}}}, booktitle = {2015 {{IEEE}} 1st {{International Workshop}} on {{Consumer Electronics}} ({{CE WS}})}, author = {Paracchini, Marco Brando and Marcon, Marco and Pau, Danilo and Falchetto, Mirko and Plebani, Emanuele}, year = {2015}, month = mar, pages = {5--8}, publisher = {IEEE}, doi = {10.1109/CEWS.2015.7867140}, isbn = {978-1-5090-4268-5}, }

- RGB-D Visual Search with Compact Binary Codes. In 2015 International Conference on 3D Vision, 2015

As integration of depth sensing into mobile devices is likely forthcoming, we investigate merging appearance and shape information for mobile visual search. Accordingly, we propose an RGB-D search engine architecture that can attain high recognition rates with notably moderate bandwidth requirements. Our experiments include a comparison to the CDVS (Compact Descriptors for Visual Search) pipeline, a candidate to become part of the MPEG-7 standard, and help elucidate the merits and limitations of joint deployment of depth and color in mobile visual search.

@inproceedings{Petrelli2015a, title = {{{RGB-D Visual Search}} with {{Compact Binary Codes}}}, booktitle = {2015 {{International Conference}} on {{3D Vision}}}, author = {Petrelli, Alioscia and Pau, Danilo and Plebani, Emanuele and Di Stefano, Luigi}, year = {2015}, pages = {82--90}, doi = {10.1109/3DV.2015.17}, isbn = {978-1-4673-8332-5}, }

- Training an Object Detector Using Only Positive Samples. In 2015 IEEE 1st International Workshop on Consumer Electronics (CE WS), Mar 2015

Accurate pedestrian detection has an important role in automotive applications because, by issuing warnings to the driver and actively acting on the car brakes, it can save human lives and decrease the probability of injuries. In order to achieve adequate accuracy, detectors require training sets containing a very large number of negative samples, which can be challenging for the training algorithms of models like support vector machines (SVM). A common approach to deal with such large datasets is Hard Negative Mining (HNM), which avoids working on the full set by growing an active pool of mined samples. A more recent method is the Block-Circulant Decomposition, which achieves the accuracy of HNM at a lower computational cost by reformulating the problem in the Fourier domain. The method, however, requires additional memory during training for the FFT, which could be reduced significantly by using only the positive examples. To address the problem, this paper makes two main contributions: (1) it shows that the circulant decomposition method achieves the same performance when only the positive samples are used in the training phase; (2) it compares the performance of a detection pipeline based on HOG features trained either with both negative and positive samples or with only positive samples on the INRIA pedestrian dataset.

@inproceedings{Plebani2015, title = {Training an Object Detector Using Only Positive Samples}, booktitle = {2015 {{IEEE}} 1st {{International Workshop}} on {{Consumer Electronics}} ({{CE WS}})}, author = {Plebani, Emanuele and Celona, Luigi and Pau, Danilo and Karimi, Pegah and Marcon, Marco}, year = {2015}, month = mar, pages = {1--4}, publisher = {IEEE}, doi = {10.1109/CEWS.2015.7867139}, isbn = {978-1-5090-4268-5}, }

2013

- Mixing Retrieval and Tracking Using Compact Visual Descriptors. In IEEE Third International Conference on Consumer Electronics - Berlin (ICCE-Berlin), Sep 2013

Visual search has seen many improvements over the years, but its application to video content is still an open research problem and it is often limited to still images. Based on the tools devised by the standardization group MPEG CDVS, we developed a processing flow that processes, in nearly real time, a video acquired with a low-cost imager and performs content search and retrieval of the top match from a local database. To allow efficient interest point detection, we used a GPU-accelerated SIFT library. To process the video frames efficiently, we developed a new dataflow processing scheme which allows switching between object searching, retrieving and tracking in order to keep the number of queries sent to the database to a minimum. A search in a local database is performed only when no object has been recognized, and once a good match has been found, the algorithm switches to tracking mode.

@inproceedings{Pau2013, title = {Mixing Retrieval and Tracking Using Compact Visual Descriptors}, shorttitle = {Consumer {{Electronics}} - {{Berlin}} ({{ICCE-Berlin}}), 2013}, booktitle = {{{IEEE Third International Conference}} on {{Consumer Electronics}} - {{Berlin}} ({{ICCE-Berlin}})}, author = {Pau, Danilo and Buzzella, Alex and Marcon, Marco and Plebani, Emanuele}, year = {2013}, month = sep, pages = {103--107}, publisher = {IEEE}, address = {Berlin, Germany}, doi = {10.1109/ICCE-Berlin.2013.6697963}, isbn = {978-1-4799-1412-8}, }